Prompt Engineering - Basic Principles:

A prompt is the input text fed into a Language Model (LM), and the skill of creating such text to obtain the expected result is known as prompt engineering. This practice involves crafting input that is both clear and concise, helping AI-driven tools to understand the user’s purpose. In summary, for the successful application of this technique, it’s crucial to guarantee that responses generated by AI tools are neither nonsensical nor inappropriate.

In this post, we’ll share with you some of the possibilities for what we can do, as well as best practices of fundamentals of prompt engineering and optimized prompts to get desired results.

Clear and Specific Instructions:

We should express what we want a model to do by providing instructions that are as clear and specific as we can make them. This will guide the model towards the desired output and reduce the chance that you get irrelevant or incorrect responses. Writing longer prompts provides more clarity and context for the model, which can lead to more detailed and relevant outputs.

The first technique that would help to write clear and specific instructions is to use delimiters to indicate distinct parts of the input. Delimiters can be kind of any clear punctuation that separates specific pieces of text from the rest of the prompt. These could be kind of triple quotation marks, XML tags, section titles, or anything that just kind of makes this clear to the model that this is a separate section. Using delimiters is also a helpful technique to try and avoid prompt injections.

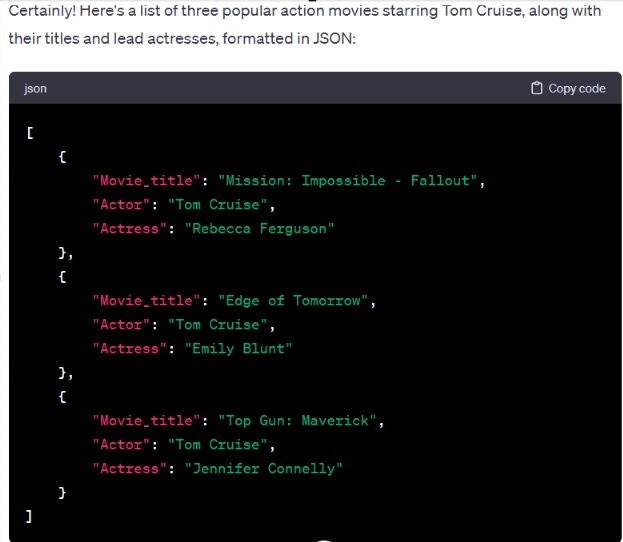

The second technique is to ask for a structured output. So, to make parsing the model outputs easier, it can be helpful to ask for a structured output like HTML or JSON. So, Here is the prompt example:

“”” Generate a list of three best action movie of Tom Cruise along with Movie titles and actress. Provide in JSON format with the following attributes: Movie_title, Actor, Actress “””

The prompt generates a list of three movie titles of Tom Cruise along with movie title and actress and provide a response in JSON format with the attributes movie title, actor, and actress. And that is nice we can read this into a dictionary or a list.

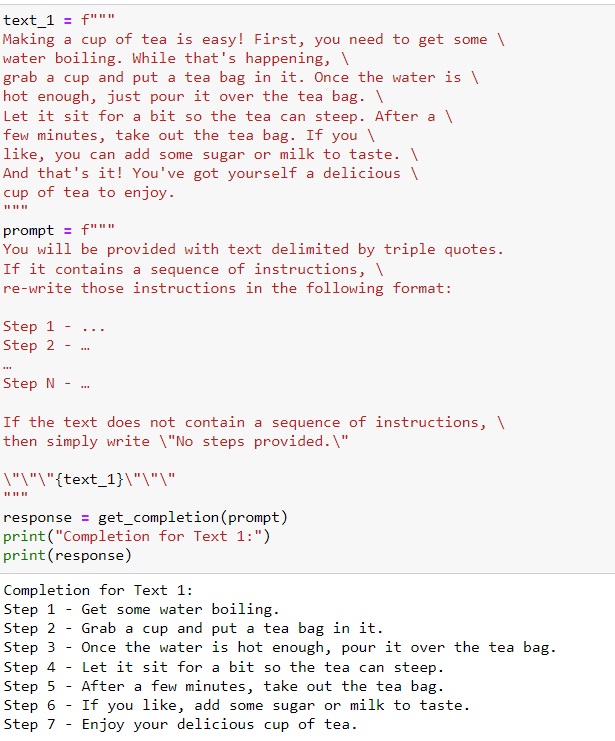

The third tactic would be to maintain the sequence of instructions. Here is just a paragraph describing the steps to make a cup of tea and will copy over our prompt. If it contains a sequence of instructions, rewrite those instructions in the following format and then just the steps written out. If the text does not contain a sequence of instructions, then simply write, no steps provided. We can see that the model was able to extract the instructions from the text.

Model Time to Think:

The Second principle is to give the model time to think. If a model is making reasoning errors by rushing to an incorrect conclusion, you should try reframing the query to request a chain or series of relevant reasoning before the model provides its final answer. Another way to think about this is that if you give a model a task that’s too complex for it to do in a short amount of time or in a small number of words, it may make up a guess which is likely to be incorrect. And this would happen for a person too. If you ask someone to complete a complex math question without time to work out the answer first, they would also likely make a mistake. So, in these situations, we can instruct the model to think longer about a problem, which means it’s spending more computational effort on the task.

Length of Prompts:

The length of prompts, emphasizes that while longer prompts can offer more detailed context and background information, shorter prompts are typically more efficient for eliciting swift responses. The effectiveness of a prompt’s length is contingent on the specific requirements of the task at hand. In some situations, a more extensive and descriptive prompt is beneficial to ensure clarity and comprehensiveness. However, in scenarios where quick answers or reactions are desired, a brief and to-the-point prompt can be more effective.